Reflections on The Curve

A compilation

We’ve already shared three posts about The Curve 2025, each by a different attendee. This final installment is a roundup of shorter reflections. Also: videos of the recorded talks are now live!

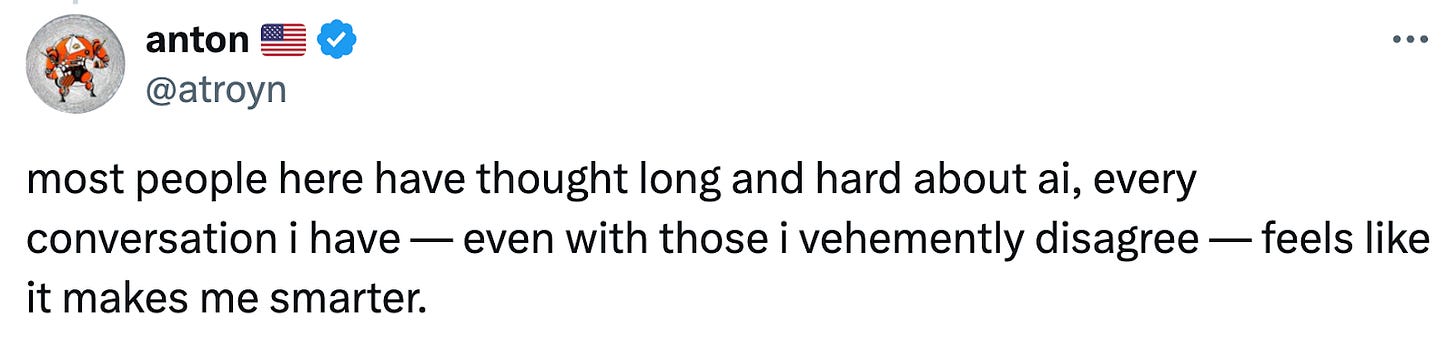

After the conference, we asked a few of the 350 attendees to share their perspective. Two themes emerged. First, as hoped, they came away with valuable new insights and relationships. Second, the specifics would have been impossible to predict in advance. (In the quotes that follow, bold emphasis was added by us, except where noted.)

Helen Toner

Interim Executive Director at Georgetown’s Center for Security and Emerging Technology

For me, the essence of the Curve was kneeling by a firepit, marshmallow skewer in hand, having two conversations at once. One conversation: learning from a world-renowned researcher about his unconventional approach to building safe superintelligent AI systems, and how his optimism about the approach had (I was surprised and glad to hear) been growing as he and his grad students had dug into it over the last couple years. The other conversation: haranguing a younger (but also brilliant) researcher about his (seemingly false?!) claim to me the previous evening that bears can be trained to juggle 3 balls, which I swear had a serious connection to AI timelines and capabilities, but I refuse to explain what.

Sam Hammond

Chief Economist at the Foundation for American Innovation

The Curve is a conference about transformative AI, which is a topic that can easily become abstract or overly speculative. And yet the sessions and conversations I had at this year’s Curve were all surprisingly concrete and actionable. As AI gets more “real” as a technology and the full societal implications start to come into view, The Curve functions as a kind of focus dial on policymakers’ binoculars. In essence, attending The Curve helps DC wonks see what SF technologists see, and thus develop the basis for joint intentionality.

The biggest update for me was realizing just how much these different groups still have to learn from each other, and how low the barriers are to effective dialogue. One might think conservative think tankers, Hollywood actors, labor leaders, AI safety researchers, and members of the technical staff would all speak totally different languages. But at The Curve, you can find them jointly grappling with the risks and opportunities of transformative AI in their fullness. It’s a sight to behold!

Bottom line: The Curve sits at the Pareto frontier of professionally useful and pure fun, making it the single best AI conference in a super competitive field. It is easily the highlight of my year.

Jack Clark

Head of Policy at Anthropic

There’s a saying in politics called “the horseshoe theory”, which holds that if you go far enough toward either end of the political spectrum, you end up with positions that are closer to each other than to those at the center. One thing I learned at The Curve is that this horseshoe theory might show up in an important way for AI safety. Very distinct communities (Bay Area alignment types and the hard right) have a similar set of concerns (“AI systems might screw up society so much that we should be very careful in how they’re deployed”), meaning they could potentially form a coalition with one another. This, to me, illustrates what is so valuable about The Curve: it brings people together with radically different ideologies and worldviews and allows them to talk to one another until they identify surprising areas of overlap.

Speaking of horseshoe politics…

Joe Allen

Transhumanism Editor at the WAR Room

The Curve is an incubator for ideological mutants. The event is somewhere between a West Coast potluck and an artificial womb facility with rows of mind children staring out at you from bubbling vats. I arrived at Lighthaven expecting some sort of memetic promiscuity—perhaps a veritable orgy of psychic miscegenation—so I protected my brain with neural prophylaxis and tried to avoid thinking too much. Even with that tinfoil chafing my scalp, I managed to enjoy myself. Something about the vibe made learning irresistible.

Attendees consistently commented on the range of people they encountered:

Alexandra Givens

President and CEO of the Center for Democracy and Technology

My goal for The Curve was simple: to get more people to care about how increasingly personalized AI systems will impact people’s privacy. I view this as one of the most central (and overlooked) issues facing our field, with implications for people’s personal rights and freedoms, competition in the AI market, and the future of how we access information about the world around us. I wasn’t expecting to find such common ground with leaders from the right and left. It turns out almost everyone is troubled by the vast amount of information an AI assistant will know about you, especially once it’s connected to your email, your calendar, even the sensors in your home. And they get that when companies hold this data, governments seek to access it too, raising concerns for civil liberties and human rights around the world. There was wide agreement that we didn’t get things right in internet privacy, and companies and lawmakers will need to do better. So, we hold shared concerns. Can that translate to shared paths forward to address them?

Sabrina Ross

Privacy and AI Policy Director at Meta

Part of what makes this conference so special is how intentionally it brings people with wildly different perspectives into real conversation. In a single afternoon, I found myself talking first with someone convinced that the future of education belongs to AI agents – and moments later with the head of the American Federation of Teachers, leading the National Academy for AI Instruction, who’s deeply committed to keeping human educators in the driver’s seat.

The most piercing interrogations of AI aren’t coming from engineers, but from political scientists, educators, labor leaders, and ethicists, in deep conversation with tech innovators, asking what we’re really optimizing for and who gets to decide what “alignment” means.

Over the course of the Curve I also realized that the so-called doomers and bloomers (those who see AI as an existential threat versus those who see it as a chance for extraordinary progress) often start from the same set of facts. Both recognize that AI is already reshaping everything from cybersecurity (where models like Claude are winning competitions) to education. What really separates all of us on this spectrum is our sense of pace, control, and trust. Even so, many of our solution sets are highly overlapping: for the doomer, transparency matters because it’s the key to catching early signs of misalignment or danger. For the bloomer, transparency is what builds trust, adoption, and collaboration between people and AI systems. What struck me most at the Curve was how close these “opposites” actually are, rooted in a shared belief that the path forward is one built on openness and meaningful interrogation by those with expertise outside of tech.

Jedidah Isler

Chief Science Officer at the Federation of American Scientists

It was a delight to join in the vibrant and thought-provoking discussions that happened at The Curve this year. As a policy person, I appreciated the vast diversity of perspectives presented from across the AI research community, particularly as it relates to the AI scaling curve. ... For better or for worse, either scaling curve is far faster than the speed of government, so we can use spaces like this to figure out how to pursue the best outcomes with the technology, ones that also serve our collective well-being, economic security and global competitiveness. I hope to come back to learn and share even more!

Randi Weingarten

President of the American Federation of Teachers

After my talk, I heard from a number of participants about new ideas regarding AI safety, which we hope to integrate into future trainings given to union members. Having participants with such broad expertise helped facilitate this kind of cross-pollination, which is so desperately needed. It was wonderful to hear other ideas about how we can help advance student privacy and AI safety from experts within that industry, particularly in light of the refusal of the federal government to protect our youth. I heard dozens of concrete ideas, from those working at AI companies and in civil society, for approaches that we had not previously considered. I’m looking forward to seeing what we can do together to implement these suggestions in trainings and policy.

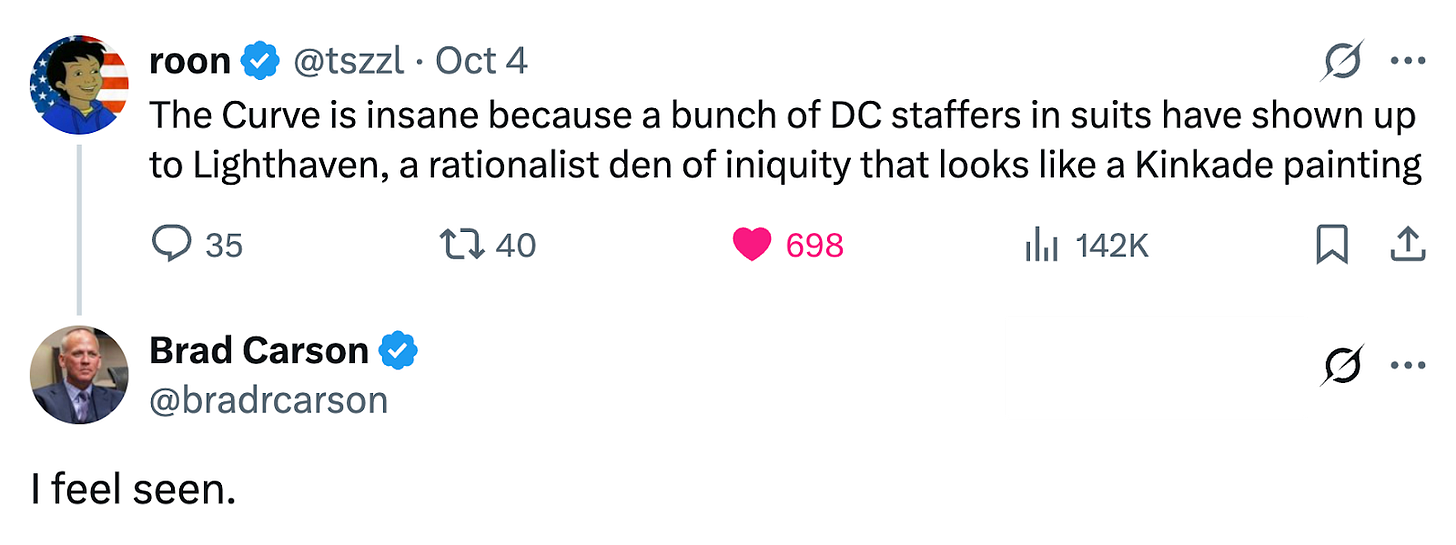

Other writing about The Curve

Bending The Curve – AI infovore Zvi Mowshowitz’s comprehensive review. “...every (substantive) conversation I had made me feel smarter.”

The Room Where AI Happens – in her newsletter, Concurrent, Afra Wang presents some central themes she observed. “This piece is written for readers outside the room—people without technical backgrounds, without AI insider status, who aren’t already ‘where it happens.’”

We’re all behind The Curve – Shakeel Hashim kicks off the weekly Transformer newsletter with some thoughts on the conference, and lessons that need to be shared with the broader world. “...the distance between The Curve and the rest of the world watching and waiting for a crash remains vast.”

Thoughts on The Curve – Nathan Lambert, a researcher at the Allen Institute for AI and noted analyst and proponent of open-source models, covers some things he learned.

AI Skeptics and AI Boosters are Both Wrong – Timothy Lee, a reporter who writes at Understanding AI, expands on what he sees as the core divide in the AI discourse.

Thanks to everyone who came and made it great! And to constructive critics like Boaz, who keep us aiming higher.

Stay tuned for details on The Curve 2026!