Process vs. Product: Why We Are Not Yet On The Cusp Of AGI

You Can't Learn The Journey By Observing The Destination

How long until ChatGPT, Anthropic’s Claude, or another AI model achieves human-level general intelligence?

Before that happens, I believe several significant challenges will need to be addressed. I’m going to describe those challenges in a short series of posts, culminating with some thoughts on the actual timeline. In this first post, I’ll argue that current AIs are not well suited to the exploratory, iterative processes required for complex tasks, because they are trained on the final products of such processes, and not the process itself. We don’t show them writing and editing, we just show them books.

A Quick Review Of How Advanced AIs Are Built

I’ll use GPT-4 (the “large language model”, or LLM, underlying the most advanced version of ChatGPT) as an example to briefly explain how we train large neural networks.

Recall that LLMs perform one very simple task: predict the next word in a sequence of text. Give one “The capital of France is”, and it will answer “Paris”, not because it is specifically designed to answer questions1, but because that’s the obvious next word in the sentence.

To train a large neural network like GPT-4, we first create a random network – i.e. a network where connection strengths are set at random. To turn this useless random network into a well-tuned system, we perform the following steps2:

Send an input to the model. For instance, “The capital of France is”.

Run the model, to see what output it generates. Because it was initially set up randomly, it will generate a random output; say, “toaster”.

Adjust the connection weights to make the desired output (“Paris”) more likely, and all other outputs less likely.

Repeat this process literally trillions of times, with different input each time3.

To do this, you need trillions of different inputs. For each input, you need to know the correct output; or at a minimum, you need some way of distinguishing between better and worse outputs.

This explains why the most advanced AI system currently available is a next-word predictor. It’s not because next-word predictors are uniquely useful or powerful, it’s because we know how to come up with trillions of training samples for next-word predictors. Any collection of human-authored text can be used, and we have a lot of text available in electronic form. (If you’d like to learn more about this, see my earlier post, An Intuitive Explanation of Large Language Models.)

The success of LLMs stems from the fact that, fortuitously, next-word-predictors turn out to be able to do many useful things. People are using LLMs to answer questions, craft essays, and write bits of code. However, they have limits.

LLM Intelligence Is Broad But Shallow

I’ve previously written quite a bit about the limitations of LLMs. In brief:

Because GPT-4’s answers are polished, draw on a large repository of facts, and we tend to ask it the sorts of generic, shallow questions where its pattern library serves best, we are fooled into thinking that it is more generally capable than it actually is4.

and:

…missing capabilities [include] memory, exploration and optimization, solving puzzles, judgement and taste, clarity of thought, and theory of mind5.

In the three months since writing that, I’ve continued to see evidence that current LLMs are fundamentally shallow. Their impressive performance on an array of tasks says as much about the shallowness of those tasks as the depth of LLMs, and we are finding increasing evidence that many important tasks are not shallow. The number of useful real-world applications of LLMs is not growing nearly as quickly as anticipated; for example, LLM-generated document summaries don’t seem to be very good in practice. As OpenAI CEO Sam Altman tweeted back in March:

here is GPT-4, our most capable and aligned model yet. … it is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it. [emphasis added]

Some of the blame likely lies in the LLM training process, which places equal weight on the correct prediction of each word, regardless of that word’s importance. Suppose you train it on the following text:

What is the capital of France?

The capital of France is Paris.

When predicting the second sentence, it will consider the following two possibilities to be equally good, because they each differ from the training sample by just one word:

The capital city of France is Paris.

The capital of France is Rome.

In other words, the model is likely to devote as much energy to matching word choice, tone, and style as it is to getting facts correct. In fact, more so; in a recent interview, a Microsoft AI researcher noted that “reasoning” only comes up once every twenty or thirty words, meaning that only a fraction of an LLM’s training process actually provides feedback on its reasoning ability, and perhaps indicating that only a fraction of LLM computing time is used for serious thought. This helps to explain why LLMs are so much better at surface appearance than deep thought. Not only does the training process heavily emphasize word choice, it emphasizes learning to precisely match the particular style and word choice of any given sample of writing. Because we train LLMs to imitate their training data word-for-word, we are not just teaching them to write properly – we are spending massive amounts of compute to teach them to imitate millions of different writing styles.

I’ll close this section with a passage from a recent interview with Demis Hassabis (founder and CEO of DeepMind, the AI powerhouse that is now central to Google’s AI efforts):

[Ezra Klein] So when you think about the road from what we have now to these generally intelligent systems, do you think it’s simply more training data and more compute, like more processors, more stuff we feed into the training set? Or do you think that there are other innovations, other technologies that we’re going to need to figure out first? What’s between here and there?

[Demis Hassabis] I’m in the camp that both [are] needed. I think that large multimodal models of the type we have now have a lot more improvement to go. So I think more data, more compute and better techniques will get result and a lot of gains and more interesting performance. But I do think there are probably one or two innovations, a handful of innovations, missing from the current systems that will deal with things that we talked about factuality, robustness, in the realm of planning and reasoning and memory that the current systems don’t have. [emphasis added]

And that’s why they fall short, still, a lot of interesting things we would like them to do. So I think some new innovations are going to be needed there as well as pushing the existing techniques much further. So it’s clear to me, in terms of building an AGI system or general A.I. system, these large multimodal models are going to be a core component. So they’re definitely necessary, but I’m not sure they’ll be sufficient in and of themselves.

LLMs Learn The Destination, Not The Journey

As noted, next-word-predictors turn out to be capable of many useful things. However, just because LLMs can do some useful things that were traditionally difficult for computers, doesn’t mean they can be extended to everything people do. The LLM training process more or less amounts to letting the model read an enormous pile of text, and expecting it to develop an intuitive sense for what word will come next. The model doesn’t get to see anything about the writing process, it only sees the finished product. Some things, such as the capital of France or the structure of a valid English sentence, can be learned by simply observing a lot of examples of finished writing. Other things are very difficult to learn that way.

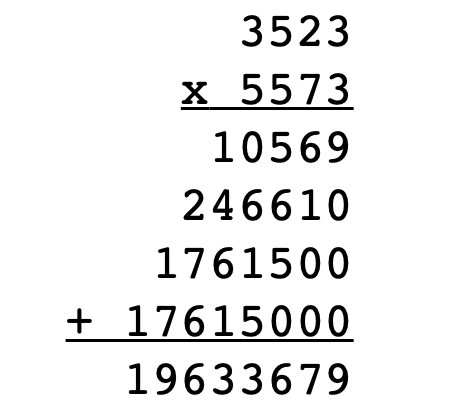

Consider long multiplication. When we teach schoolchildren to multiply large numbers, we don’t just provide examples (like “3523 * 5573 = 19,633,679”) and expect them to intuit a pattern. We show them an algorithm for long multiplication, and help them practice it.

LLMs aren’t taught any algorithms. The training data for GPT-4 probably included the Sherlock Holmes stories, but nothing about the process by which Arthur Conan Doyle devised their plots. Schools teach “creative writing”, not “stories” – stories are the product of the writing process, but the process is the thing that must be learned. LLMs see finished stories, but not the editing process; they see working computer code, but not the debugging process.

Is it any wonder that LLMs struggle with problems that require following an extended process before beginning to generate output? In ten attempts, the wunderkind GPT-4 never manages to correctly multiply 3523 * 55736.

The Next Step Is Not The Next Word

LLMs produce output step by step, one word at a time. People also work one step at a time, but the steps don’t always consist of adding another word to the end of whatever you’re doing.

Imagine that you’re writing a textbook, and you’ve gotten as far as creating the outline. You might then start writing each chapter, and you might indeed proceed one word at a time. However, at any moment you might choose to interrupt the word-by-word writing process. For instance, you might realize that the concept you’re about to explain depends on another idea that was slotted to appear later. At that point, your next step will not be to append a word to the draft; instead, you will pop up a level and revise the outline.

More generally, steps in your writing process might include:

Adding the next word to the section you’re currently drafting.

Reviewing what you’ve already written to see whether the stage is properly set for whatever you’re planning to write next.

Revising the outline to improve the sequence of concepts being introduced.

Doing some on-the-fly research to fill in a gap in your own knowledge of the concept you’re currently explaining.

Jumping back briefly to adjust an example you’d used previously, to better lay the groundwork for your current paragraph.

…and many other tasks, large and small.

All of this is just the part of the writing process that comes after you’ve created an outline. The process of creating the outline may be even messier. You’ll need to do research, assemble a list of topics, explore different ways of grouping those topics into a logical outline, and so forth.

Most complex tasks come with their own rich variety of incremental steps. For instance, debugging a computer program is practically an entire field of study in its own right. You might begin by generating hypotheses for where the buggy behavior could originate, and then apply the scientific method to systematically test those hypotheses. Or you might first modify the code to emit some information that might help you narrow down the problem. Often you’ll wind up jumping back and forth between different approaches, using insight from one to assist with another until you’ve located the bug.

To build AIs that can take on these more complex tasks, we’re going to need to find ways to train them on the sequence of operations needed to carry out those tasks. But it’s hard to find a database of one trillion steps in the process of, say, writing a novel. What might we do about that?

It Won’t Be LLMs All The Way Down

One popular idea for handling complex tasks using an LLM is to first ask the LLM to break the task up into steps, and then having the LLM process each step separately. For instance, rather than asking an LLM to write a textbook, we might ask it to create a chapter outline, and then invoke it separately to write each chapter.

I don’t think this “LLMs all the way down” approach will go very far. For a deep task, it’s usually not possible to specify all of the steps in advance. At any given moment, a problem or opportunity might arise which calls for jumping to a different subtask. Also, for a given step, it’s hard to prompt an LLM with all of the relevant input data and all of the options it needs to keep in mind. In the textbook-authoring example, the task of writing a single chapter might have to be specified along the following lines:

You are working on a textbook on the topic of X. Please write the chapter covering Y. You may reference the following concepts, which have been introduced in previous chapters: […long list of concepts…]. Please make sure to cover the following ideas, which we want readers to understand and/or which are necessary to set the stage for later chapters: […another long list of concepts…]. For consistency, please keep the following style guidelines in mind: […]. You should respond with a draft of the chapter. If you find that you don’t have all of the knowledge you would need to cover these topics, please generate a list of missing information to be researched. If you determine that it would be better to introduce some additional concepts in earlier chapters, please generate a list of such concepts.

This is drastically oversimplified, and even so it’s a lot to keep track of. Worse, this sort of complex, legalistic instruction doesn’t appear very much in normal writing, meaning that it doesn’t show up in the text that LLMs are trained on, and so I doubt we could expect an LLM to do a really good job of carrying it out. This – I believe – is why task-structuring tools like Auto-GPT have not yet resulted in much in the way of practical applications.

Do As I Do, Not As I Say

One approach to obtaining examples of the steps involved in performing a task is to observe humans performing that task.

For example, a recent blog post from Google Research describes a set of tools used internally at Google to assist programmers. Other coding assistants, such as Github Copilot, are trained on examples of finished code. The Google Research tools are trained on the sequence of actions programmers took while writing code. The results were quite interesting:

For example, we started with a blank file and asked the model to successively predict what edits would come next until it had written a full code file. The astonishing part is that the model developed code in a step-by-step way that would seem natural to a developer [emphasis added]: It started by first creating a fully working skeleton with imports, flags, and a basic main function. It then incrementally added new functionality, like reading from a file and writing results, and added functionality to filter out some lines based on a user-provided regular expression, which required changes across the file, like adding new flags.

Instead of trying to write an entire program in a single linear draft, the tool was able to follow a more natural, incremental process. This seems like an important step toward developing models that can tackle complex problems.

There are limitations to this approach. The Google model couldn’t see everything the programmer was doing, only their edits to the code file. If the programmer leaned back in their chair and spent five minutes thinking something through, it couldn’t see the steps in that thought process. It couldn’t see them taking and reading notes, consulting external information sources, running the program to see how it behaves, etc. A tool that isn’t trained on those steps won’t be well positioned to perform tasks which require them.

Another limitation of learning to model human behavior is that the model will be limited to human skill levels. ChatGPT is superhuman in its breadth of knowledge, but very much not in the depth of its thought processes. The same seems likely to apply to any tool which relies primarily on imitating human behavior.

Learning In Simulation

Another approach to learning a task is to start with a randomly initialized network, and repeatedly let it attempt the task, adjusting the network to encourage the actions it took on more-successful attempts and discourage the actions it took on less-successful attempts.

AlphaGo was the first computer program to beat a human professional Go player. Within two more years, it had progressed to the point of defeating the world champion. To accomplish this, DeepMind had to find training data that would enable superhuman play. From a Google blog post:

We first trained the policy network on 30 million moves from games played by human experts, until it could predict the human move 57% of the time (the previous record before AlphaGo was 44%). But our goal is to beat the best human players, not just mimic them. To do this, AlphaGo learned to discover new strategies for itself, by playing thousands of games between its neural networks, and gradually improving them using a trial-and-error process known as reinforcement learning.

In other words, once AlphaGo had learned everything it could from human play, it began playing against itself. Remember that to train a neural network, you need:

A bunch of different inputs.

A way of distinguishing between better and worse outputs.

In this case, each game constituted an input, and moves were considered to be good if they led to victory. Because DeepMind could play AlphaGo against itself indefinitely, they could generate as much training data as they liked.

Later, DeepMind created AlphaGo Zero, which did not use any data from human games; it was trained from the outset on games against AlphaGo and then against itself. AlphaGo Zero eventually defeated the original AlphaGo, and was noted for its “unconventional strategies and creative new moves7”.

This sort of approach has been used to develop AIs that can play almost every conceivable game, from chess to poker to video games such as Pong and Super Mario.

It’s not obvious to me how well this approach will extend to real-world tasks. Suppose we want to train a novel-writing AI. We can have it generate a million novels, but how do we evaluate their quality? Or suppose we want to train a code-authoring AI; can we find a sufficient range of tasks for it to undertake? How do we evaluate their correctness, legibility, security, and other criteria? Solutions to these problems probably exist, but may not come easily.

Tasks in the physical world, such as automotive repair or folding laundry, pose a different challenge. You could perform your training in the real world – building a bunch of prototype robots and letting them attempt the task over and over again. But this is messy, slow, and expensive. Or you can train the AI in a simulation, but that requires building an accurate simulation of things like the behavior of a rusty bolt or a rumpled shirt, which may be difficult, not to mention expensive in computer time.

It may be necessary to solve the training problem separately for each skill we want AIs to learn, just as we have training programs for thousands of distinct careers.

One last difficulty: for the sorts of training I’ve discussed in this section, it’s often not possible to provide feedback until the entire task is complete – for instance, when you can see who won the game, or the completed computer program runs correctly. You may only get one evaluation signal per task, as opposed to LLM training, where we get a separate evaluation signal for each word.

Things That Might Make This Easier

To recap: we’re able to build powerful LLMs, because we can assemble trillions of words of data on which to train them. However, because those trillions of words represent mostly finished products, the resulting model isn’t much good at the exploratory, iterative processes required for complex tasks. To generate the training data we’d need for complex tasks, we could observe human behavior, or we could let an AI repeatedly attempt the task under simulation conditions. Either way, gathering a sufficient amount of data will be expensive, and must be done separately for each task to be learned.

Here are some factors that might make the challenge of training AIs to perform complex tasks a bit less daunting:

Learning a specific task, such as writing a novel or diagnosing an illness, may not require as much training data as LLMs need to model every kind of writing there is. You may have noticed I mentioned that GPT-4 was trained on trillions of words, while AlphaGo and its variants were only trained on millions of games.

When we train a model on the process for carrying out a task, rather than only giving it examples of completed results, the model might not need as much training data to achieve competence.

Humans can often get to a given level of performance using far less training data than current neural networks. AlphaGo had studied tens of millions of games before it reached human champion level. I don’t know how many games a human champion will have studied or played, but ten games per day for 20 years would only be 73,000 games. Perhaps we can find a way to come closer to human-level training efficiency.

If you train a neural network on one task, that often provides a head start for teaching it another task, even if the two tasks are quite different8. By starting with generically pre-trained networks, we can reduce the amount of training data needed for each new task.

We can build models that rely on LLMs to handle some parts of a job. For instance, a code-authoring AI might use a language model to write documentation and code comments.

We’re Not On The Cusp of AGI

I believe that LLMs are a side track on the path to human-level general intelligence. To escape that dead end, I believe we will need to train models on the iterative process of constructing and refining solutions to complex tasks. This will require new techniques to generate the necessary training data and evaluation algorithms; we may need to develop such techniques for each significant task we wish AIs to learn.

Is there a scenario where we might quickly bypass this obstacle, and something like GPT-6 or GPT-7 emerges in the next few years and can reasonably be termed AGI? I don’t think so, but if things turn out that way, it will be for one of the following reasons:

We find a major breakthrough that allows computer models to learn more the way humans do, using relatively small amounts of training data. Perhaps we could then train an AI software engineer by literally enrolling the AI in a college computer science program.

In a few more generations, LLMs have become so powerful that they can in fact talk themselves step by step through a complex thought process, along the lines I dismissed in “It Won’t Be LLMs All The Way Down” (above). This might be helped along by adding relevant examples to the training process.

GPT-7 will have trained on so many examples of complex work products that it really will be able to just blast through tasks like “write a textbook on carbon capture techniques” or “find a more efficient algorithm for training neural networks”, internally performing some sort of deep pattern match on examples in its training data9. This would basically mean that there really is “nothing new under the sun”, i.e. that even when we spend years tackling a deep problem, at the end we will simply have rediscovered a remix of things that have been done before.

My intuition says that deep issues of computational complexity will render #3 unlikely. I’m also fairly skeptical of #2: I could imagine something like this emerging, but I think it would still require the LLM to go through a task-specific training process as described in this blog post; perhaps, by leveraging the LLM, we could reduce the amount of task-specific training required. #1 is the real wild card: if we could build AIs that learn like people, that would be a game changer. However, I don’t expect that to be a path for us to quickly reach AGI, because I think it would take more than one breakthrough to achieve.

In my next post, I’ll discuss another major gap between current LLMs and human-level intelligence: memory.

Actually, newer LLMs are a little bit trained specifically to answer questions, using techniques with names like “instruction tuning” and “RLHF”. But this is a minor feature of the overall training process, and doesn’t undercut the point I’m making here.

I’m glossing over a lot of technical details, but they’re not important for the points I discuss here.

Actually, sometimes the training process will consist of more than one “epoch”, meaning that each bit of training data will be used more than once. But this is a minor factor; you still need an enormous amount of training data.

This is a bit unfair; current LLMs have a particularly hard time working with multi-digit numbers, for technical reasons having to do with the way that text is converted into the “tokens” that these models process internally. The models aren’t able to naturally deconstruct numbers into digits, and therefore are at a disadvantage for tasks like multiplication. But multiplication is still a good example of a problem where just staring at a bunch of correct solutions is not the best way to learn.

Some search terms to learn about this are “pre-training” and “transfer learning”. The P in GPT stands for “pre-trained”.

This would be the ultimate triumph of The Bitter Lesson, which states that, to improve the capabilities of an AI model, one should increase the scale of the model, rather than trying to incorporate detailed human expertise directly into the model’s workings. That said, I’m not proposing that we incorporate human expertise, merely that we will have to switch to a different style of model. So I don’t think the bitter lesson contradicts my thesis.

Hey Steve, a friend of mine sent me this link-- I think you and your subscribers might find it interesting/amusing: https://nicholas.carlini.com/writing/llm-forecast/question/Capital-of-Paris

I continue to enjoy and read all of your posts-- thanks for laying things out so clearly.

One thing I can't quite get out of my mind, having followed computer chess since the early 80's, is how the response to the programs as they were improving seems extremely similar to what we see with LLMs. At first, the programs made laughable moves and were seen as a novelty. Then, they played moves about as good as an average club player, and everyone quickly (and correctly!) pointed out that the average club player isn't doing much more than calculating combinations, and computers are understandably better at that.

Then, the programs started playing at the master level, and as such they would occasionally make moves that, if another master saw the move without knowing it was made by a computer, that master would say it was creative or clever. But that was quickly dismissed as basically luck, a function of it having to pick some move, and the move it picked that was supposedly creative was just picked because the computer was trying to maximize its advantage. And that, too, was also true.

As computers progressed to the grandmaster level, the commentary about their play started to change. The fact that computers started playing undeniably clever and creative moves on a regular basis was attributed to the fact that it could do millions of computations a second and to the fact that it had such a clear goals of material gain and checkmate. No question that that was still true! At the same time, a kind of cynicism about human grandmasters became popular, that all but the top 100 or so weren't really very creative, they were just reusing known patterns from previous games in different ways. And since computers were often hand coded to recognize many of these patterns, it wasn't surprising that computers, being faster in the obvious ways, did better.

Which brings us to what I see as the key analogy with today and LLMs. Because once computers got to strong grandmaster level, lots of chess people began saying that chess programs would probably beat all but the best players, but that the programs had not shown anything original. They weren't going to be capable of new ideas. The car could beat the human in a sprint, but nothing was being learned.

What happened, of course, is that as computers got faster, the programs, as they got stronger, just invented techniques that humans hadn't previously seen. They were still just trying to maximize their advantage, but came up with "new" ideas as a by-product of seeing deeper. Especially in the realm of speculative attacks and robust defense, humans learned there were new possibilities in positions they had previously ruled out. It is true they often could not copy the computer's precision, and thus couldn't always utilize this new knowledge, but they knew it was true, and it changed expert humans' approach, especially to the opening and endgame.

And once AlphaZero and other neural nets tackled chess, where they were not being programmed with human knowledge to build upon, but were learning and teaching themselves, they introduced other new ideas to experts that, this time, humans could emulate a bit more easily. In Go, even more so-- the human game has been revolutionized by the new ideas AlphaGo demonstrated.

So while I (think I ) understand your point that the real world-- writing a novel, solving an original programming problem, writing a non-generic analytic essay that has original insights-- doesn't have clear ways to evaluate quality and to learn and improve, and may thus be non-analogous to Chess/Go/etc., I do wonder how much a sheer increase in scale may be the big difference after all, a la "The Bitter Lesson".

Put another way, sometimes I feel like we are looking at GPT-4 much like we might look at a chimpanzee. Surprisingly smart, but limited in important ways. But how is our brain fundamentally different from theirs? Perhaps, to keep the analogy going, that our brains have one or two advances, like transformers with LLMs, that allowed ours to develop abstraction and language far beyond the chimps. Or did those advances just "appear" because of increased scale or development through more "training data"? I wish I knew the slightest thing about such topics.

OK, thanks again. I'm looking forward to reading your next entry on Memory!