First, They Came for the Software Engineers…

What 25 interviews tell us about AI's messy impact on tech work

If you follow the tech labor market and/or AI tools for engineering, you saw some shocking stats last month:

Dario Amodei, CEO of leading AI model developer Anthropic, told the Council on Foreign Relations that before the end of this year, AI could be writing 90% of all code.

Garry Tan, head of Y Combinator, said that for a quarter of the startups in YC's Winter 2025 batch, 95% of the code is written by AI.

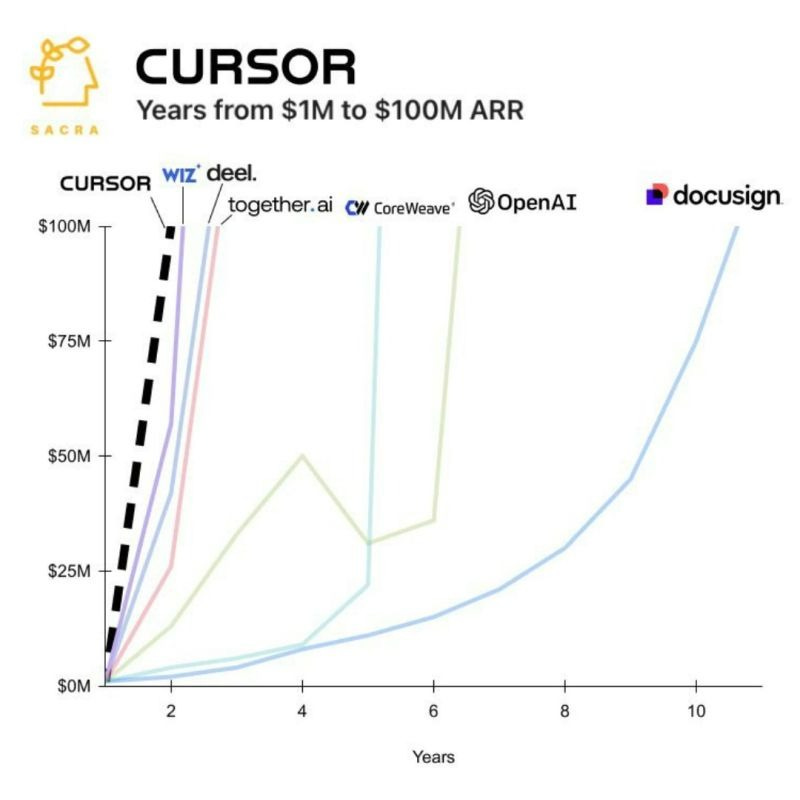

The Information reported that Cursor, the hot new AI coding startup, has surpassed $200 million ARR, implying over half a million paid subscriptions[1] and presumably millions more free users.

Meanwhile, the tech labor market has continued to soften. Last year, tech companies large and small laid off over 150,000 employees, and unemployment in the tech sector rose to 2.9% in January.

We recently hosted a dinner on the nature of knowledge work, where a participant pointed out that the jobs that AI companies are most familiar with are… the jobs of people who work at AI companies.

So, we asked ourselves: Should we expect that those jobs will be among the first to be automated by the new wave of AI? Is the softening of the tech labor market an early warning sign, and is the fast adoption of tools like Cursor a cause of that very softening?

Silicon Valley has always prided itself on disruption: creating new technologies that reshape entire industries. But what if, as the saying about revolutions goes, this one ends up eating its own? Will tech workers themselves be some of the first casualties of a global AI-driven jobs crisis?

Why would tech jobs be automated early?

I see four compelling arguments suggesting that engineering and other technical jobs might be particularly susceptible to the first waves of AI disruption:

AI companies understand the problem space: AI labs are staffed by engineers solving problems they know intimately. Unlike building AI for medicine or law, developing coding assistants requires less external domain expertise, potentially accelerating progress. And there’s a rich history of coders building tools to accelerate coding. As the old saying goes: “I’d rather write programs to write programs than write programs.”

Relatively clear success metrics: It’s relatively easy to evaluate whether code works, compared to outputs in many other fields. (This is particularly easy in the competitive coding space, which might be why AI tools are already so good there compared to real-life engineering tasks.) This clarity allows for easier generation of training data, automated evaluation, and tighter feedback loops for improving AI models compared to domains with subjective success criteria.

Reams of available training data: There are not large, free online datasets of, say, the workflows and outputs of event planners or virtual personal assistants. But there are massive open-source datasets of code – much of it well-commented, with clear explanation of why the authors made the choices they did! – that can be used for training models on engineers’ jobs.

Incentives for recursive improvement of AI research: Leading AI labs are intensely motivated to automate their own workflows to accelerate research and development, with the goal of creating a potential feedback loop that speeds up the creation of more powerful AI.[2]

If you’ve read the recent AI 2027 scenario laid out by OpenAI whistleblower Daniel Kokotajlo and collaborators, you’ll recognize the last of these as a key dynamic in their vision of the future.

Is AI already driving job loss in tech?

Is AI already responsible for significant downsizing in the tech sector? Or will it be in the very near future? Reasonable people seem to disagree quite strongly on these points.

To get a clearer picture of the current state of affairs, we (Steve and I) talked to more than 25 engineers, managers, and adjacent tech workers about how AI is changing the tech labor market right now – and how they expect it to change in the near future.

Below are my key takeaways and reflections from these interviews, grouped into three categories. But first, an important caveat: Most of our interviewees work within the tech sector itself, even though the majority of all engineering jobs are inside of companies in other industries. I assume that the impacts of AI on engineering will be felt first inside of true tech companies, who are naturally early adopters of new technologies – and that patterns in the tech sector can be read as portents for engineering teams in other sectors. But there may well be relevant trends in other industries that we missed.

1. AI has not (yet) fundamentally transformed most tech jobs

Based on our research, some common narratives don’t hold up:

AI is not the primary driver of current tech market softness: While AI is clearly a factor influencing future plans and some current decisions (especially around junior roles), the strong consensus points to macroeconomic factors (interest rates, post-pandemic correction) as the dominant force behind layoffs and hiring slowdowns. One particularly compelling datapoint came from a conversation with a coding bootcamp, who pointed out that the job market for engineers is just as soft right now even in industries where staff are explicitly banned from using AI tools, like finance.

AI rarely delivers massive productivity boosts today: Dramatic gains are real in some cases, but are highly task-specific and user-dependent. Typically, large productivity boosts occur for small, well-defined, greenfield projects, or when an engineer is first learning a new language or API. For other work, gains from using current AI tools are often far more modest – and potentially entirely offset by increased time needed for review, debugging, integration, and managing AI quirks. Moreover, no one spends all of their time coding – and AI doesn’t (yet) substantially reduce time spent developing specs, on QA, in meetings, etc. So, overall job productivity boosts are generally much smaller than the headline numbers.

Not all – or even most – successful or competitive tech companies are already using AI widely internally: Partly this is because, overall, AI is still not massively transformative for coding. In most cases, a great engineer without AI still seems to be significantly more valuable than a less-skilled engineer with AI. Partly, it’s because adoption and organizational change take time, even for transformative technologies. And in some settings, AI isn’t transformative at all yet. For example, one startup founder we spoke with is building apps for a niche platform with relatively little data (online resources and open-source code) for AIs to train on. AI tools simply aren’t very good at writing code in that environment. (Yet.)
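The gap between headline speedups and whole-job gains described above is essentially Amdahl's law: speeding up one slice of the work only helps in proportion to that slice. The sketch below is illustrative only; the 30% coding share, the 2x coding speedup, and the 10% review overhead are hypothetical assumptions, not figures from our interviews.

```python
def overall_speedup(coding_share: float, coding_speedup: float,
                    review_overhead: float = 0.0) -> float:
    """Amdahl's-law estimate of whole-job speedup.

    coding_share: fraction of total work time spent writing code (0..1).
    coding_speedup: how many times faster AI makes the coding portion.
    review_overhead: hypothetical extra time, as a fraction of the original
        total, spent reviewing, debugging, and integrating AI output.
    """
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    # Non-coding work is untouched; coding shrinks; review adds time back.
    new_time = (1.0 - coding_share) + coding_share / coding_speedup + review_overhead
    return 1.0 / new_time


# Hypothetical engineer who spends 30% of their time coding.
print(round(overall_speedup(0.30, 2.0), 3))        # 1.176: coding 2x faster
print(round(overall_speedup(0.30, 2.0, 0.10), 3))  # 1.053: plus 10% review time
print(round(overall_speedup(0.30, 1e9), 3))        # 1.429: near-instant coding
```

Even if AI made coding effectively instantaneous, this hypothetical engineer would be only about 1.43x more productive overall, because the other 70% of the job (specs, QA, meetings) is untouched.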

2. However, AI is contributing to acute pressure on entry-level hiring

Many people we interviewed said that their company (or companies they work with) has practically frozen hiring for junior engineers and data analysts. On the supply side, we spoke with coding bootcamps that are shutting down, and heard that enrollment in university CS majors is falling as job prospects diminish.

Why? Well, for one, general softness in the tech labor market means it’s easier to hire experienced engineers than it has been in a long time. But I don’t think that’s the whole story. Based on our interviews, it seems that AI probably is a major factor in the massive drop in entry-level hiring.

Here’s why:

“Code monkey” jobs are disappearing: Time spent on purely routine coding or data querying tasks is rapidly diminishing. As one senior manager at a Big Five tech company told us, ten years ago, being able to write good SQL on a whiteboard during an interview was a valuable differentiator that could get you a job. No longer. The automation of these routine tasks means it’s not clear how entry-level staff can add value quickly.

Hiring managers expect AI coding capabilities to expand quickly: One mid-level engineering manager at a ~200-person tech company told us that he doesn’t think AI is actually making their team more productive right now, but he thinks his bosses’ expectations of future productivity gains have changed their hiring priorities. His C-Suite expects that in the next year AI will be able to do the jobs of entry-level staff, even if it can’t already. Junior engineers have always been long-term investments; if AI will be able to do their work in 6 months, why invest?

AI increases the value of senior engineers’ time. One of our interviewees at a big tech company told us that experienced engineers are relatively more valuable now because they have the ability to look at a large chunk of not-quite-right code generated by AI, quickly figure out what’s wrong with it, and fix it. (Perhaps as they used to with the code generated by junior engineers?) Meanwhile – like most junior engineers – current tools aren’t good at overall software architecture, product mindset, managing technical complexity, systems thinking, and just plain knowing how to connect the pipes between big sections of a code base. Senior engineers are good at all of these things, especially with a code base they’ve worked on for a long time.

Taken all together: If AI makes senior engineers more valuable than ever, and junior engineers less so – a trend which you expect will continue – and meanwhile for other reasons the job market is more employer-friendly for senior talent than it has been in a long time… why would you waste your senior engineers’ time in training and onboarding entry-level hires?

3. AI blurs functional lines

Even if the tech industry continues to employ as many people (or more!), AI is beginning to change the nature of those jobs and the skillsets that are most valuable. It’s easy to imagine a future where product teams consist of a different mix of types of people, with fewer pure engineers.

AI (currently) helps semi-technical people the most. The biggest productivity gains reported from among our interviewees were from people who are not actually engineers by job title, but have enough technical knowledge that they can now use AI to do small coding tasks with less (or no) engagement from an engineer. A product manager might now be able to prototype or even implement basic features, or build small internal tools themselves. One of our interviewees worked as an engineer about a decade ago, but then moved into non-technical roles. He is now routinely building websites and tools for his projects that he would never have built without AI. “I know enough about coding to know what to ask for and how to evaluate code written by AI, but given my rustiness I never would have put in the time to write the code on my own.”

AI empowers everyone to be a generalist and rewards a product mindset. Traditional tech teams have product managers, designers, and engineers. AI blurs those lines and enables more cross-functional role descriptions. PMs and designers can do light coding; PMs and engineers can create functional design prototypes. We heard from several people who say the ratio of PMs to engineers in their companies is increasing because features can be built faster, or that engineers with product vision are now more valuable than ever before.

Data analysts specifically may need to learn broader skillsets to survive. Data analysts used to spend a lot of time writing SQL queries or equivalent for executives and product managers. As basic data analysis gets automated or democratized, we heard from senior data staff at two companies that their existing cohort of data analysts are transitioning into roles that more closely resemble a traditional data engineer (data pipelines, debugging, etc.).

How’s this all going to play out in the near future?

Let’s circle back to Dario Amodei’s prediction that by the end of 2025, AI could be writing 90% of all code.

Is this plausible? I suppose, if you include scenarios where AIs are generating massive amounts of junk code for freelancers or at a few weird organizations. But I’m going to take those as against the spirit of the exercise.

Otherwise? I don’t think so.

First, there are still quite a lot of obstacles to building AI tools that can take over human jobs end-to-end. E.g., as Steve has covered elsewhere, AI tools are much worse than competent humans at managing complexity, they have no long-term adaptive memory, and they’re bad at metacognition and dynamic planning. The most rigorous study we know of, by METR, seems to suggest timelines of 5-10 years before AI can reliably do realistically messy engineering tasks that take a skilled human engineer about a month. (Though that estimate extrapolates a straight-line fit to the paper's trend. Recent model releases hint that progress might be trending upwards faster than the original paper predicted, and the paper was already ancient 6 weeks after release, of course...)
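To see why the assumed trend matters so much for these timelines, here is a back-of-the-envelope extrapolation of a task "time horizon" that doubles at a constant rate. The starting horizon (1 hour), the two doubling times (7 and 4 months), and the ~167 working hours in a month are all assumptions chosen for illustration, not numbers taken from the METR paper.

```python
import math


def months_until_horizon(current_hours: float, target_hours: float,
                         doubling_months: float) -> float:
    """Months until a task-length horizon that doubles every `doubling_months`
    grows from `current_hours` to `target_hours` (pure exponential trend)."""
    if current_hours <= 0 or target_hours <= 0 or doubling_months <= 0:
        raise ValueError("all inputs must be positive")
    doublings_needed = math.log2(target_hours / current_hours)
    # If the target is already within reach, no further time is needed.
    return max(0.0, doublings_needed * doubling_months)


WORK_MONTH_HOURS = 167  # assumption: ~40 h/week * ~4.2 weeks

for doubling in (7, 4):  # assumed doubling times, in months
    months = months_until_horizon(1.0, WORK_MONTH_HOURS, doubling)
    print(f"doubling every {doubling} months -> ~{months / 12:.1f} years")
```

Under these assumptions, shortening the doubling time from 7 to 4 months pulls the estimate in from about 4.3 years to about 2.5 years, which is why a few unexpectedly strong model releases can move forecasts so much.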

Second, our interviews suggest that even among the early adopters in the tech industry itself, behavioral change can’t happen that quickly – despite the impressively fast adoption of tools like Cursor. In other, more conservative industries, change will take even longer.

Now, if some major technical breakthrough is right around the corner, and full-blown ASI takes over the world this fall, all bets are off. (Maybe Amodei knows something I don’t!) But assuming not, here are some of my predictions about AI and the technical labor market in the next few years.

Four near-future predictions, barring AGI

QA will be an important frontier for AI agents. The ability of AI agents to conduct QA (Quality Assurance) will be a focus of agentic development in the coming year or two. In the meantime, by accelerating coding before QA, AI tools will create a QA bottleneck. As tools that can use computer interfaces like OpenAI’s Operator and Claude Computer Use improve, a large market will emerge for AI agents that can supplement or replace human QA – and those tools will in turn make AI-powered coding tools more valuable.
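The QA bottleneck prediction follows from a basic property of serial pipelines: finished output can't exceed the capacity of the slowest stage. A minimal sketch, using hypothetical weekly capacities for a two-stage code-then-QA pipeline:

```python
def pipeline_throughput(stage_capacities: dict[str, float]) -> tuple[str, float]:
    """Return (bottleneck stage, throughput) for a serial pipeline.

    Output per week is capped by whichever stage has the lowest capacity.
    """
    bottleneck = min(stage_capacities, key=stage_capacities.get)
    return bottleneck, stage_capacities[bottleneck]


# Hypothetical capacities, in features per week.
before = {"coding": 10.0, "qa": 12.0}
after_ai_coding = {"coding": 25.0, "qa": 12.0}  # AI speeds up coding only

print(pipeline_throughput(before))           # ('coding', 10.0)
print(pipeline_throughput(after_ai_coding))  # ('qa', 12.0)
```

In this toy example, coding gets 2.5x faster but shipped output rises only from 10 to 12 features per week; any further gain has to come from speeding up QA.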

"AI Debt" will become a recognized challenge: The ease with which AI can generate code, especially when used without sufficient oversight, carries risks. Managing the resulting "AI Debt" – issues related to code maintainability, testability, security vulnerabilities, and a team's reduced understanding of its own codebase – will emerge as a significant challenge within the next 3-5 years, potentially creating new types of specialized work but also tempering the net long-term productivity benefits.

Adaptation will be non-negotiable: A handful of the managers we interviewed have already laid off engineers who were uninterested in or unable to adopt AI tools quickly. Our sense is that in most companies this isn’t a dealbreaker yet – but it will be soon.

If something remotely resembling the status quo plays out for longer than a few years, then I’d make a fourth prediction:

The talent pipeline will dwindle: Many people we spoke with believe that entry-level hiring will no longer be worth it for most companies, but that experienced engineers will remain valuable for a long time. (Perhaps forever?) None of them had an answer to the obvious concern: Where will your experienced engineers come from? How do you train people into the role of an AI-empowered senior engineer if no one pays them to spend years as junior engineers first? I predict that the industry will go for a few years without a solution – but that if engineering jobs remain in anything like recognizable form in a decade or so, the industry may face a talent crisis.
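The pipeline worry is a stock-and-flow problem: senior engineers leave through attrition and retirement, and are replenished largely by promoting juniors. The toy simulation below uses made-up rates (8% senior attrition, 25% of juniors promoted per year, a starting team of 100 seniors and 40 juniors); all of these are hypothetical assumptions for illustration. It shows why a hiring freeze erodes the senior pool only gradually, which is one reason the industry could go years without confronting the problem.

```python
def simulate_seniors(years: int, seniors: float, juniors: float,
                     junior_hires_per_year: float,
                     senior_attrition: float = 0.08,
                     junior_promotion: float = 0.25) -> list[float]:
    """Toy stock-and-flow model of a senior-engineer pool.

    Each year, a fraction of seniors leave, a fraction of juniors are
    promoted to senior, and new juniors are hired. All rates are hypothetical.
    Returns the senior headcount at the start and after each year.
    """
    history = [seniors]
    for _ in range(years):
        promoted = juniors * junior_promotion
        seniors = seniors * (1 - senior_attrition) + promoted
        juniors = juniors - promoted + junior_hires_per_year
        history.append(seniors)
    return history


steady = simulate_seniors(10, seniors=100, juniors=40, junior_hires_per_year=10)
frozen = simulate_seniors(10, seniors=100, juniors=40, junior_hires_per_year=0)
print(f"after 10 years: {steady[-1]:.0f} seniors with hiring, {frozen[-1]:.0f} without")
```

With these invented rates, the team that keeps hiring juniors ends the decade with about 114 seniors, while the team that freezes junior hiring is down to about 66, even though the decline barely shows in the first year or two.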

What about the longer run? Will engineering still be a job?

Who the hell knows, is the short answer.

The longer answer is: We have heard three competing hypotheses about how the tech labor market will play out.

1. The Jevons Paradox theory: Demand for engineers will skyrocket.

The Jevons Paradox describes how technological advancements that increase the efficiency of resource use can paradoxically lead to increased consumption of that resource. I.e., when something gets cheaper, you might not just buy more of it quantity-wise, but actually end up spending more total money on it.

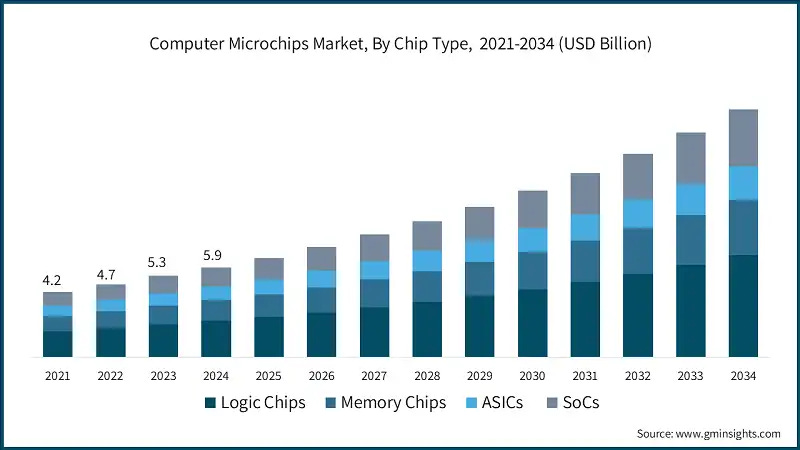

For example, as compute power gets cheaper – as computer chips get more efficient – obviously we use vastly more total compute. So much so that we also increase total expenditure over time on compute hardware.

Applied to software engineering, this theory suggests that because software creation has historically been supply-constrained, AI will dramatically expand the scope of what's considered possible or worthwhile to build. Lower costs could enable countless new applications, features, and custom internal tools that were previously unviable. This explosion in the demand for software – for more features, more integrations, more niche applications, more frequent updates – could outpace the efficiency gains, leading to a net increase in the total demand for engineering work needed to design, guide, integrate, test, and maintain it all.

Overall, under this theory, there will be even more engineers, though the nature of their work will shift towards higher-level design and oversight.
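The Jevons theory above hinges on how strongly demand responds to price. One standard way to make that precise is price elasticity of demand. Assuming, for illustration, a constant-elasticity demand curve Q = k * P**(-e), total spending is P * Q = k * P**(1 - e): when demand is elastic (e > 1), a lower price raises total spending; when it is inelastic (e < 1), spending falls. The constant k = 100 and the elasticities 0.5 and 1.5 are hypothetical.

```python
def total_spending(price: float, elasticity: float, k: float = 100.0) -> float:
    """Total spending P * Q under constant-elasticity demand Q = k * P**(-elasticity)."""
    quantity = k * price ** (-elasticity)
    return price * quantity


for elasticity in (0.5, 1.5):  # hypothetical: inelastic vs elastic demand
    before = total_spending(price=1.0, elasticity=elasticity)
    after = total_spending(price=0.5, elasticity=elasticity)  # price halves
    print(f"elasticity {elasticity}: spending {before:.1f} -> {after:.1f}")
```

Halving the price cuts total spending from 100.0 to about 70.7 when demand is inelastic, but raises it to about 141.4 when demand is elastic. Read this way, the Jevons theory of engineering is a bet that demand for software is elastic.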

2. The Battlestar Galactica theory: All this has happened before; all this will happen again.

Some people just think that AI is not really a huge deal in the grand scheme of things. The most compelling case I heard for this theory came from an experienced engineer who suggested that everyone should, to put it bluntly, go read a damn history book.

She pointed out that every 10 or 20 years there’s a new major layer of abstraction introduced into the way engineers code. First, people coded directly in machine code or low-level assembly language; then came higher-level compiled languages like C, which automated away direct hardware manipulation; then object-oriented languages added another layer; then managed languages with automatic memory management and garbage collection (like Java or C#) eliminated huge amounts of manual effort and common errors; then came sophisticated frameworks, cloud abstractions, and dynamic scripting languages.

Each time, tasks that previously required significant expertise were automated, the nature of engineering work shifted, and new complexities and opportunities emerged. From this perspective, AI code generation is simply the next layer of abstraction, likely to follow the same historical pattern of disruption, adaptation, and eventual stabilization rather than representing a final endpoint for engineering jobs.

3. The full automation theory: Engineering jobs will be among the first to go

Or, if you believe that AI will eventually replace large swaths of current jobs, tech jobs could be at the top of the list, for the reasons discussed above. This could look like mass unemployment. Or the economy overall could stay at full employment, with people moving from engineering into other jobs where humans retain a comparative advantage over AI.

So what do I believe?

My second-grader loves math and engineering. Should I push him towards a coding career, where he will ride a wave of unprecedented demand for human creativity spurred by cheaper code? Or at worst, simply adapt to the next layer of abstraction, like software engineers always have?

Or instead of robotics summer camp, should I send him to theater camp? After all, as long as humans have any economic power, we’ll probably at least place a premium on human performers[3] – but maybe he’s got no chance in a cognitive race against hyper-capable AI?

Right now, planning for his future feels less like charting a course and more like placing bets on which history – or prophecy – will win out.

I think all three of the paths I describe above are possible for the next few decades; which one plays out depends on great unknowns in how AI capabilities will develop. But if I had to bet, I’d lean toward a version of Scenario #3: Even if AI doesn’t cause mass unemployment overall, engineering jobs decline steeply. The AI revolution might just eat its own.

[1] Cursor’s lowest-tier subscription is $240/year. $200 million / $240 = roughly 830,000; assuming some people are on the pricier Business plan, 500,000 seems like a good estimate of the number of subscribers. One estimate (take it with a grain of salt!) suggests there are ~30 million software engineers in the world; if that’s the right ballpark, then Cursor has already converted a very sizeable fraction of the total addressable market.

[2] Leading AI labs are probably most directly incentivized to increase the productivity of ML (machine learning) engineers and researchers. But improving general engineering productivity is also beneficial to their work – and would be a natural byproduct even if they were focusing on automating ML workflows.

[3] While storytelling is a very useful skill in most jobs (and life in general), making a living as an entertainer per se is brutal.
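The Cursor subscriber estimate in the first footnote above is simple arithmetic, sketched here with the footnote's own inputs ($200 million ARR, $240/year lowest tier, ~30 million engineers worldwide). The 500,000 figure is the article's judgment call after allowing for pricier plans, not a derived number.

```python
ARR_USD = 200_000_000              # reported annual recurring revenue
LOWEST_TIER_USD_PER_YEAR = 240     # lowest paid tier, per the footnote
ENGINEERS_WORLDWIDE = 30_000_000   # rough estimate cited in the footnote

# If every subscriber paid the cheapest price, this is the most subscriptions
# the revenue could represent; pricier plans push the real count lower.
upper_bound_subs = ARR_USD / LOWEST_TIER_USD_PER_YEAR
print(f"upper bound: {upper_bound_subs:,.0f} subscriptions")

estimated_subs = 500_000  # the footnote's estimate after allowing for pricier plans
print(f"share of engineers: {estimated_subs / ENGINEERS_WORLDWIDE:.1%}")
```

This prints a ceiling of about 833,333 subscriptions, and puts the 500,000 estimate at roughly 1.7% of the world's software engineers.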

The integration of AI into the SDLC presents a significant opportunity to enhance efficiency, accelerate innovation, and drive business value. To fully realize this potential, organizations must adopt a disciplined and strategic approach that navigates a complex landscape of new considerations. Success requires moving beyond simple productivity metrics to understand the full Total Cost of Ownership and the nuances of the "productivity paradox".

Doing so involves strengthening engineering discipline to manage new forms of technical debt and implementing a "Zero-Trust" security posture to address novel vulnerabilities inherent in AI systems.

Furthermore, a human-centric strategy is essential to foster skill development and maintain collaborative team dynamics, while a robust governance framework is needed to manage the evolving legal and ethical considerations of intellectual property and data privacy.

Ultimately, a mature, "AI-disciplined" strategy, built on executive-led governance, rigorous verification, and the augmentation of human expertise, is the key to transforming AI's promise into tangible, sustainable results.