What Will AI Do For Us In The Near Term?

The more time I spent writing this post, the more optimistic I became

I’ve spilled a lot of virtual ink talking about the potential downsides of AI development, and I’ll be spilling more. Today, I’m going to review the upsides.

I haven’t written much about the potential benefits of AI; the myriad commercial enterprises working on AI platforms and applications are doing a pretty good job of that. Also, to be frank, I was having a surprisingly hard time getting excited about the topic: I’ve read too many articles about automated e-mail campaigns and artificial friends, thanks-but-no-thanks. But the positive case is helpful context for other topics I’d like to cover, and it will give me a chance to discuss both the scope and limitations of what AI might offer. And the process of assembling this post left me significantly more excited about the upsides of AI (while still concerned about downsides).

I’m going to discuss things which seem feasible based on a fairly straightforward extension of existing technology and research. I will not be discussing powerful general intelligences, robots with human-level dexterity, or other more exotic futures – the world I described in Get Ready For AI To Outdo Us At Everything. That world will be so different that it doesn’t really fit into the same discussion as nearer-term applications.

What does “nearer-term” mean? My friend David Glazer commented: “My guess is the fat part of the curve for the benefits described here is 3-7 years out – there will be earlier wins, and the value will keep growing for a long time, but I think most of the stuff we can see now on a relatively straight line should bear fruit in that window”. That seems as good a guess as any, which is sobering, because it’s a reminder that we can barely guess at the implications of AI beyond about 7 years.

Within that window, we can already envision many applications for AI, in fields ranging from agriculture to zymurgy[1]. The benefits could be dramatic, if we can chart a path that minimizes risks without unnecessarily impeding progress. In this post, I’ll sketch the main areas of known potential, highlight some important problems that AI is not likely to help with, and touch briefly on the questions of regulation and impact on workers.

Advancing Science

In many domains, the current frontier of science lies at a point where we are confronted with a system that is extremely complex, and difficult to understand and make predictions about, but where we have a large amount of data. The “deep learning” techniques behind much of modern AI show great potential to enable progress by finding patterns in the data.

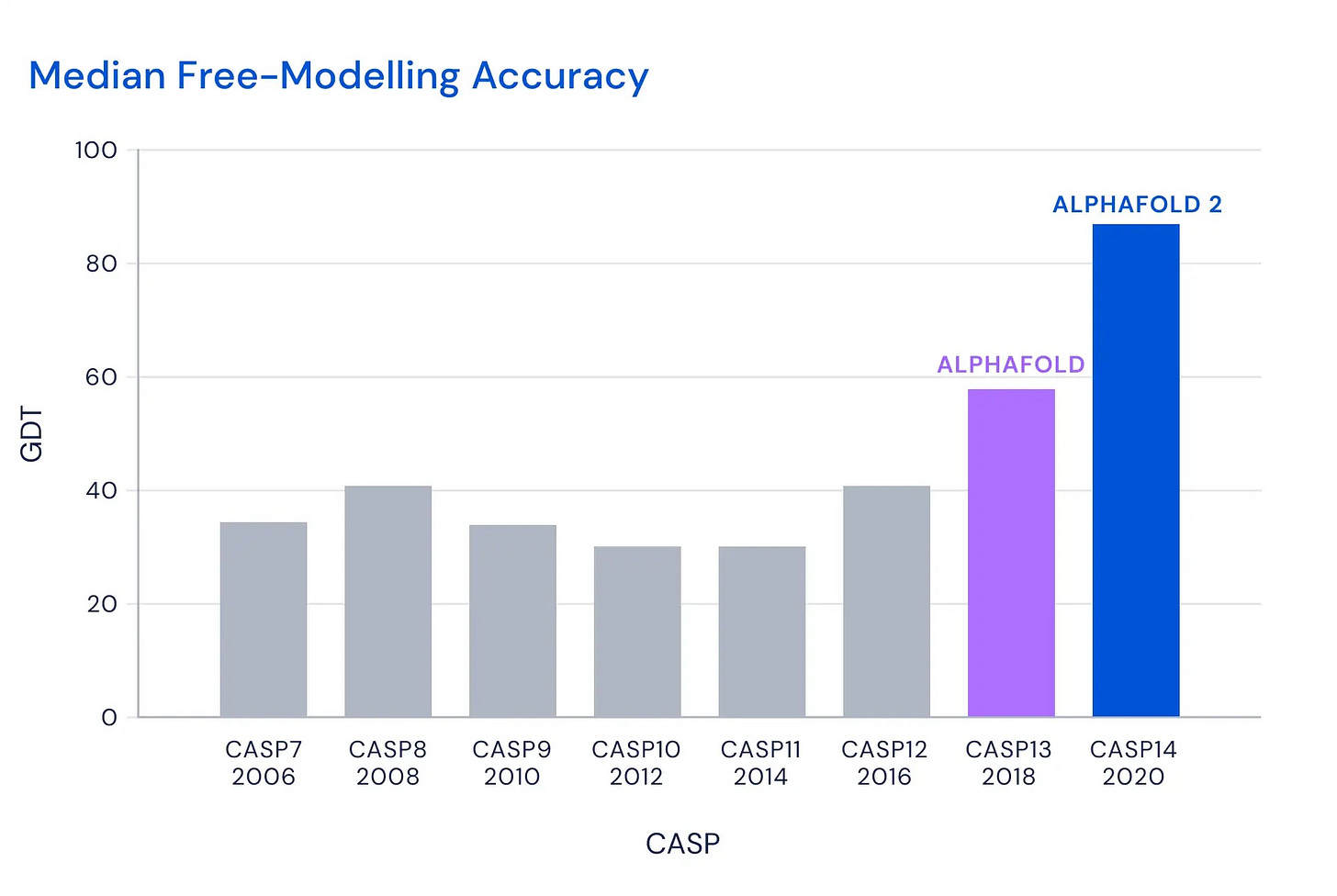

For instance, in biology, DeepMind’s AlphaFold represents a massive leap forward in the field of protein structure prediction: given the sequence of amino acids that make up a protein, predict what shape the protein molecule will take. Because proteins are at the heart of all biology, understanding their physical structure is hugely important. Scientists have been working on the problem since the 1960s, with limited progress until recently. The chart above shows results in a competition held every year or two, with essentially no progress until AlphaFold bursts onto the scene.

Biomedicine offers many other such problems: under what circumstances do our cells express different genes? How do proteins react with one another? How does this interact with diet, the microbiome, and other environmental factors? In disease, how does it go wrong? In each case, we have (or could potentially collect) massive amounts of data, but struggle to make sense of it.

The potential is not limited to biology and medicine. In materials science, neural nets might be able to design new and more efficient enzymes for chemical synthesis, new battery chemistries, and pathways for recycling plastic or creating structural materials from captured CO₂. For fusion, deep learning might be able to refine plasma confinement designs. Process optimization yields applications ranging from reducing energy use for data center cooling to optimizing the schedule for cleaning dust off of solar panels.

Undoubtedly there are other possibilities.

Advances in these areas could have major implications: for medicine, climate and the environment, and other sectors. However, caution is warranted. It’s difficult to take a result from the lab and get it to work in the real world. This is especially true of medicine; history is cluttered with new drugs and therapies that seemed promising in theory, but struggled to bear fruit in practice. I am optimistic that AlphaFold and other deep learning techniques will yield important advances, but I also expect that progress will be scattershot and gradual.

Eliminating Scarcity of Access to Expertise

Let’s talk about customer service. (Don’t groan; I bring good news.) Cable companies, airlines, and other large businesses make it hard to talk to a person, because if they made it easy, they’d have to hire more support agents. As a result, we hesitate to even seek out help. LLMs might be able to usher in a world where you could be instantly connected to a competent (virtual) agent, via text or voice, at any time.

Sure, it’s easy to imagine companies getting this wrong, throwing together slapdash bots that aren’t really very helpful, or programming them to waste your time trying to sell you on additional services[2]. But I believe it could be done well, and I have a fantasy that commercial pressure will push companies in that direction. It should be pretty feasible for an LLM to draw on a rich database of past problems and solutions, while simultaneously using context clues to understand whether you need a long explanation or just a quick statement of facts and options.

The really interesting applications could be in areas where we wouldn’t even think of having “customer support” today. Imagine if a jury duty summons linked to an agent that could immediately explain how the rules apply to your college-aged child who sort of still lives at home, but mostly lives in a dorm room in another state[3]. Or if enterprise software packages came with agents that would explain which third-level menu you need to use to accomplish a certain task. There are so many situations where we have to interact with a confusing product or system, where a support bot that was capable of understanding specific circumstances – even if it wasn’t perfect – could be so much more helpful than a tired FAQ.

But the potential for LLMs to provide advice and assistance goes far beyond customer service. When you have a medical question, it’s hard to get access to your doctor – or any doctor, for that matter. You often have to go in person, if you can even get an appointment, which is difficult on short notice. (This is especially maddening for the little questions that come up so often, such as confusion about when you’re supposed to take a certain medicine, or wondering whether a symptom is worth following up on.) Once you do get into their office, they usually can’t spend more than a few minutes with you. Sometimes you can at least talk to an “advice nurse”, sometimes not. (I’m describing the situation in the US; I’m not sure about other countries.) ChatGPT has already demonstrated the ability to give strikingly good medical advice much of the time – despite not being designed to do so – and frankly it is a much better communicator than many harried medical professionals. A recent report in JAMA Internal Medicine took questions asked on Reddit’s “r/AskDocs” and compared answers from volunteer physicians with answers from ChatGPT (source). ChatGPT’s answers scored much higher on both quality and “empathy” (as judged by human evaluators), and with ChatGPT you get a guaranteed instant response. There are, of course, issues with relying on ChatGPT for medical advice, but it’s still early days, and the tools should only get better from here.

For mental health, the situation is at least as bad as in medicine. The cost of therapy is too high for many folks, or they may only be able to afford one visit per month. Even if you can afford it, it can be hard to find a therapist with room for new patients. To address these issues, a variety of startups are working on providing mental health services using LLMs.

Then there’s education. School districts can only afford a certain ratio of teachers per student; we can’t give each child much individual attention. It’s not yet clear how AI can best enter the picture, but there are many possibilities, from interactive textbooks to virtual tutors and teacher’s aides to straight-up automated classrooms (physical or virtual). It seems to me that this could go pretty well or very badly, depending on how we choose to deploy the technology. There is a lot of room for education to be handled better using the technology we have available today, so it is far from guaranteed that we will make good use of AI, but the possibilities seem strong.

Over time, I’m sure we’ll find more applications in the “access to experts” category. Travel planning? Career counseling?

Increasing White-Collar Productivity

Many applications finding their way into the market today fall into this category: tools for writing code, emails, and marketing copy, generating illustrations (including most of the images I’ve used in this blog), and so forth. These tools don’t seem likely to take over jobs outright, but they can automate specific tasks – often, though not always, the more tedious ones – allowing workers to be more efficient. Over time, we may find more and more such applications. For instance, a recent New York Times article describes doctors using GPT-4 to edit their messages to patients (the results were much easier to understand and came across as much more compassionate), summarize medical histories, and write arguments to insurance companies.

A lot of “back-office” work may also be amenable to partial automation: data entry, secretarial and receptionist jobs, low-level legal, finance, and journalism work. The list probably includes a lot of more obscure jobs that I wouldn’t really know about… say, processing insurance claims, or planning logistics for a mining operation.

Then there’s a long list of automatable tasks that might traditionally be done by a good executive assistant, if everyone had one: taking good meeting notes, summarizing documents and emails, writing polished emails, and so forth.

Finally, ChatGPT appears to be a “better Google” for many questions: better at taking context into account, avoiding spam, and cutting out the fluff that many websites add to give them more “content” and thus more room for ads. It’s still early days; ChatGPT’s question-answering capabilities will only improve, helping make everyone more productive.

(In the past, of course, advances in tech have conspicuously failed to show up in productivity statistics. I’ll discuss this below.)

Physical-World Jobs

Today, applications of AI in the physical world are relatively limited: Roombas, some factory automation, and so forth.

Human-competitive robot bodies still seem to be a good way off[4]. Until we get there, I think physical-world applications will remain confined to niches, but we may see the list of niches grow substantially. Self-driving cars and trucks, retail self-checkout stations that actually work well, warehouse automation, and delivery robots all seem plausible in the coming years. Progress will likely be gradual, and initially confined to specific use cases; for instance, we may see trucks that are only capable of fully independent operation on the freeway, and have to stop outside of town to hand over to a human driver.

One interesting possibility is precision agriculture: the idea of fine-tuning farming to precise local conditions. Not just field-by-field, but plant-by-plant. For instance, adjusting the amount of irrigation and fertilizer used on each square meter of land, according to the soil composition, microclimate, and so forth. This requires gathering high-resolution data (for instance, using drones), processing that data to come up with an optimized farm plan, and then having the necessary automated equipment to adjust conditions at each location.
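To make the middle step (turning high-resolution survey data into a per-location plan) a little more concrete, here is a minimal Python sketch covering irrigation only. Everything in it is a hypothetical illustration: the `Cell` grid structure, the moisture target, the liters-per-point conversion, and the per-cell equipment cap are made-up assumptions, not agronomic values or any real system's logic. It only shows the shape of the idea: per-square-meter readings go in, per-square-meter instructions come out.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class Cell:
    """One square-meter grid cell, as measured by a (hypothetical) drone survey."""
    row: int
    col: int
    soil_moisture: float  # assumed: volumetric water content as a fraction, 0..1


# All three constants are hypothetical placeholders, not real agronomy.
TARGET_MOISTURE = 0.30   # desired moisture fraction for this crop
LITERS_PER_POINT = 2.0   # liters per m² to raise moisture by one percentage point
MAX_LITERS = 40.0        # most water the equipment can deliver to one cell


def irrigation_plan(cells: Iterable[Cell]) -> Dict[Tuple[int, int], float]:
    """Map each cell to liters of water, proportional to its moisture deficit."""
    plan: Dict[Tuple[int, int], float] = {}
    for c in cells:
        # Cells at or above the target get no water at all.
        deficit = max(0.0, TARGET_MOISTURE - c.soil_moisture)
        # Convert the deficit to percentage points, then to liters, capped per cell.
        liters = min(MAX_LITERS, deficit * 100 * LITERS_PER_POINT)
        plan[(c.row, c.col)] = round(liters, 1)
    return plan


if __name__ == "__main__":
    survey = [Cell(0, 0, 0.30), Cell(0, 1, 0.25), Cell(1, 0, 0.10)]
    print(irrigation_plan(survey))
```

A real system would fold in soil composition, microclimate, fertilizer, and forecasts, and would likely fit its response curves from data rather than use a fixed linear rule. The structure stays the same, though: the decision is made separately for each small patch of land instead of once per field.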

Problems AI Can’t Solve

To put things in perspective, it’s not clear that AI – that is, AI short of the transformative “do everything people can do, only better” future that I’m not discussing here – can do much to help with many of the big problems of our day. To name a few: housing shortages, traffic jams, nuclear weapons, crime, drug addiction, poverty, political polarization and governmental dysfunction, climate change. AI will nibble away at a few of these; for instance, progress in materials science and precision agriculture could bend the curve on climate change a bit; we might invent better drugs to help fight addiction (à la methadone); AIs monitoring security cameras might help fight crime.

But the solutions to these problems lie mostly through better policies. AI can’t help with that: we’re not going to delegate solving politics to AI. Matthew Yglesias discusses this in I'm skeptical that powerful AI will solve major human problems; here are some snippets:

Do we need a technological breakthrough to fix [traffic jams]? Will AI help?

I doubt it. Not because AI couldn’t help (it clearly could), but because there are well-known existing technological solutions that aren’t being implemented because the politics is difficult.

And that’s true across many domains of American life — the biggest roadblock isn’t a lack of technology, it’s a lack of implementation and followthrough. So more and more of our innovative energy has been poured into the narrow zone of software development, to the point where people have started using “tech” to mean “computer stuff,” even though flying to the moon and nuclear fission and many other landmark technological breakthroughs during the era of faster productivity growth had nothing to do with computer stuff.

I think the brute fact is that no matter how useful AI may be to the scientists and engineers of the future, creating a world of material plenty requires political solutions, not just engineering ones. If elevators didn’t exist, people would say that inventing elevators could solve housing scarcity [emphasis added]. In the real world, we know that multifamily housing is simply illegal on the vast majority of America’s developed land and that even where it is legal, its scale and quantity tend to be sharply limited.

The Challenge Of Regulation

Some important potential applications fall into heavily regulated fields; notably, medicine and mental health.

Over-regulation has long been a problem in medicine. Excessive licensing requirements, a deliberate insufficiency of training programs, and other factors limit the supply of doctors. Obtaining approval for a new drug is notoriously costly, slow, and difficult. From the Matthew Yglesias piece I cited above:

I’ve read so many non-specific accounts of how AI is going to help us cure all kinds of diseases and do other miraculous things. … But the field of medicine is hobbled by problems like almost no medications being in the top pregnancy safety tier, including the most commonly used medication for morning sickness. Why is that? Well, it’s because to be classified as Category A, a drug has to have undergone clinical trials in pregnant women, and it’s basically impossible under the current canons of medical ethics to organize such a clinical trial.

It seems inevitable that important benefits of AI will be held back by regulation. At the same time, the many potential risks that AI raises mean that we must be more careful than ever not to throw the regulatory baby out with the bathwater. LLMs can hallucinate inaccurate medical advice; slapdash “therapist bots” could have all sorts of unintended consequences.

Ideally, rather than simply reducing regulation, we would find ways to improve it. There is ample room to provide more safety with less friction than today’s overly complex, overly rigid, process-oriented approach.

Will Economic Growth Accelerate?

Famously, the entire sweep of the modern computer industry – from PCs, through the Internet, and into the present day of ubiquitous connectivity – has had no visible impact on productivity metrics. My understanding is that it’s controversial whether this is real; for instance, it could be that productivity was otherwise set to fall (e.g. due to increased regulation, and the low-hanging fruit of the Industrial Revolution having already been plucked), and tech is compensating for that. Still, the persistent failure of the tech age to show productivity increases is striking.

In Could AI accelerate economic growth?, Tom Davidson notes that economic growth has accelerated drastically when viewed on a time scale of centuries, and argues that this is because the overall amount of effort invested in R&D keeps increasing. In the period I’m discussing in this post, we could see several effects that further increase overall R&D:

Specialized AIs like AlphaFold are in effect performing R&D directly.

General productivity enhancements (such as AI executive assistants) will allow human researchers to accomplish more.

Increased researcher productivity may make it worthwhile to employ even more researchers.

Improvements in education (and, especially, access to education) could increase the size of the talent pool.

Perhaps this will finally overcome whatever mysterious forces have been responsible for the lack of productivity gains in recent decades.

Implications For The Labor Market

It seems likely that we’ll see AIs taking over significant parts of many jobs – mostly white-collar, but also some jobs in the physical world.

The implications may be complicated. The price of many goods and services should go down, to the benefit of consumers, even as profits increase. People who hold onto their jobs may be able to command a higher salary; for instance, we’ll need fewer low-level customer service agents, and more supervisors of customer service bots. However, many people will have to retrain for new jobs.

Unlike past transitions, the impact will fall heavily on white-collar workers. As the labor market shifts and the population ages, it may turn out that most of the new jobs lie in the physical world: nursing, plumbing, and so forth.

It’s also possible that the overall demand for labor will decrease, which could impact wages and generally decrease the importance of labor relative to capital. However, this trend may be counterbalanced by demographics, which in many countries points toward a shrinking pool of working-age people. Given a shrinking labor pool (and growing population of elderly needing care), increased automation could actually be a lifesaver. Unfortunately, many of the jobs created by an aging population – such as nursing and home care – may not be very amenable to automation.

Writing This Post Made Me More Excited About AI

When I sat down to write this post, I was feeling strangely “meh” about the useful potential of AI. All I could think about was bots writing marketing copy, obnoxious personalized advertisements, and new drugs that probably won’t work (because most new drugs don’t work) and wouldn’t get approved if they do.

The more time I spent writing, the more optimistic I became. I don’t think AI will bring world-changing benefits in the next few years, but I do think we’ll see many noticeable gains: in economic productivity, medicine, chemistry, education, and other areas; to say nothing of the innumerable small conveniences in everyday life, from instant customer service to automated meeting notes.

Just as money can’t buy happiness, the economic and other benefits of near-term AI won’t directly address the fundamental woes of society, from housing shortages to drug addiction. However, if we can restore a sense of progress, if incomes start rising across the board and people feel more hope for the future, then we may be able to summon the will to address these entrenched issues.

Undoubtedly there will be many applications of AI that we can’t yet foresee. Just as I was drafting this post, Henrik Karlsson proposed that LLMs could serve as community moderators for online discussion forums. As models evolve and we learn more about their capabilities, novel uses will emerge.

None of this takes away from the fact that AI poses many risks; we mustn’t charge ahead blindly. And ill-designed regulations, including existing regulations in fields such as medicine, will retard progress. The question we should be asking ourselves is not “is AI good or bad”, but “how can we best attain and distribute the rewards while minimizing the risks”?

What applications have I missed? What should I be more (or less) excited about? Let me know!

This post benefited greatly from suggestions and feedback from Avi Zenilman, David Glazer, Roy Bahat, and Sérgio Teixeira da Silva. All errors and bad takes are of course my own. If you’d like to join the “beta club” for future posts, please drop me a line at amistrongeryet@substack.com. No expertise required; feedback on whether the material is interesting and understandable is as valuable as technical commentary.

[1] Zymurgy: “a branch of applied chemistry that deals with fermentation processes (as in wine making or brewing)” (Merriam-Webster). AI-driven advances in fermentation processes are an active area of practical research.

OK, fine, I didn’t know what “zymurgy” meant either; I got it from ChatGPT. You caught me. Prompt: “What are some sectors of science and industry whose name begins with 'z'?” You try to come up with a word to pair with “agriculture” without AI assistance. At least I double-checked to make sure it wasn’t a hallucination.

[2] Also insisting, insisting, that you Have A Nice Day, because Your Business Is Very Important To Them and They Care About Ensuring You Have a Great Experience. Why are support agents always forced to talk like this? Is anyone actually swayed by it?

[3] Obviously this is just a hypothetical scenario, and has never arisen for the author.

[4] Yes, I’ve seen those Boston Dynamics videos, but everyone says they’re highly cherry-picked, and you don’t see them doing much with their hands. Hands are hard.

I’m quite excited about the near-term ways AI can augment and enhance individuals. I personally get a lot of value from GPT in its current form. One of my favorite use cases is to give it a problem or situation along with the things I’m considering, and ask it for additional areas, risks, or considerations I may have missed. While most of the output is predictable, I find it usually surfaces one or two worthwhile things that I would otherwise have missed.

Beyond GPT being useful when asked, I look forward to it having a bit more... agency (for lack of a better term) to speak up on its own. For example, if I have a particular goal I’m trying to achieve, say losing weight, and I frequently stumble on my discipline in the evenings by overeating (this example is starting to hit too close to home 😅), it could craft a message drawing not only on its understanding of my habits and tendencies but also on its body of psychology “knowledge.” Even if it is only slightly effective, this could be a meaningful enhancement.

I can think of a few others in a similar vein, and honestly, none of this requires any further AI advancement, just more clever use of the software systems surrounding it.

These types of augmentations raise the usual concerns. Specifically on my mind is skill atrophy, even for myself and even in the near term. I find myself letting Copilot write a full function for me, which, from experience, requires vigilance to ensure it’s doing what you actually want and not doing it in some fraught way. Humans suck at vigilance over the long haul, and AI is _just_ reliable enough for us to trust it.